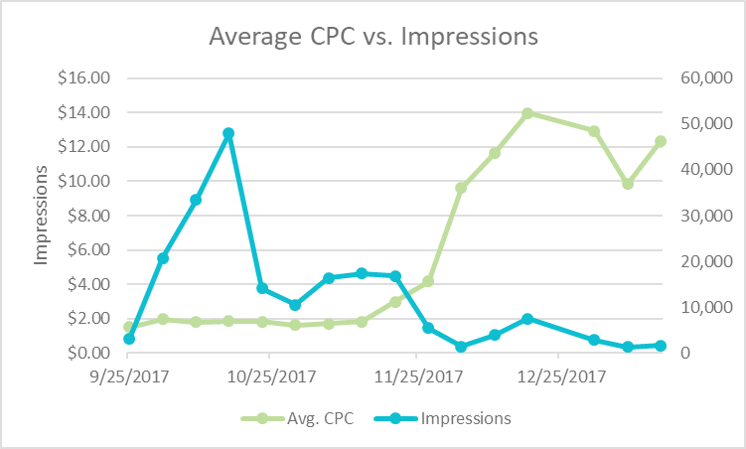

We had a problem. A client’s best-performing campaign, which targeted competitor keywords, hit the skids. Impressions tanked, and despite increasing bids and improving position, we could not get them back.

We looked at impression share and keyword auction insights – no clear change. We reached out to our Google reps, and they suggested the competitor had started bidding higher on their brand terms. So Google recommended adding more creatives, bidding to first position (Google’s suggested first position bid was $32.60!), and including search partners. Essentially, Google suggested we spend more money with more ads and greater reach. Surprise!

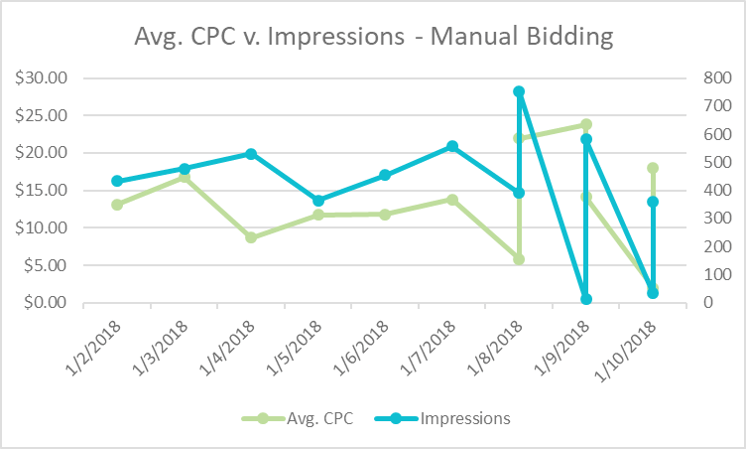

That was not an option. Our target cost per free account activation was $25, so we couldn’t pay upwards of $30 per click. Our first attempt was to make manual bid changes to increase impressions.

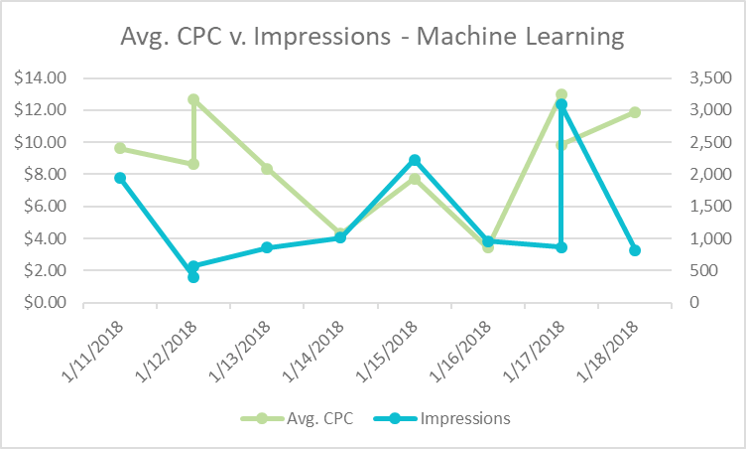

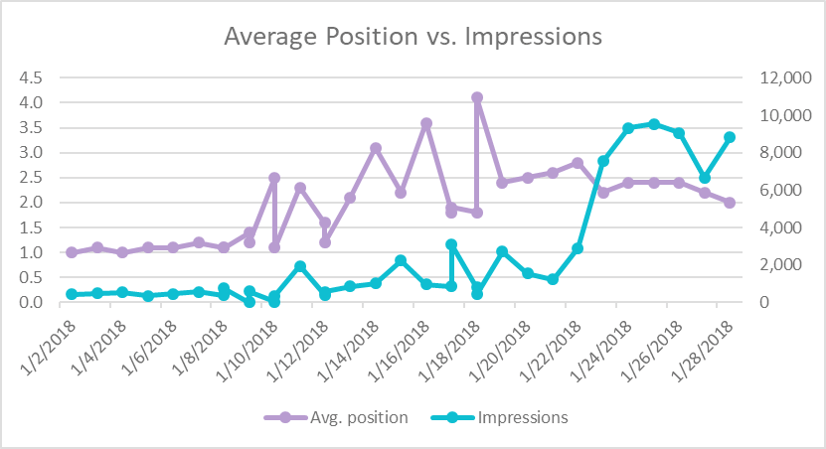

This proved ineffective. Despite increasing the bid, impressions actually fell. Manual wasn’t working, so it was time to give Google’s highly touted machine learning a try. Obility changed the bid strategy to maximize clicks. We knew this campaign had been effective in the past, so we wanted to get as many clicks as possible from it. We initially put no cap on the bid.

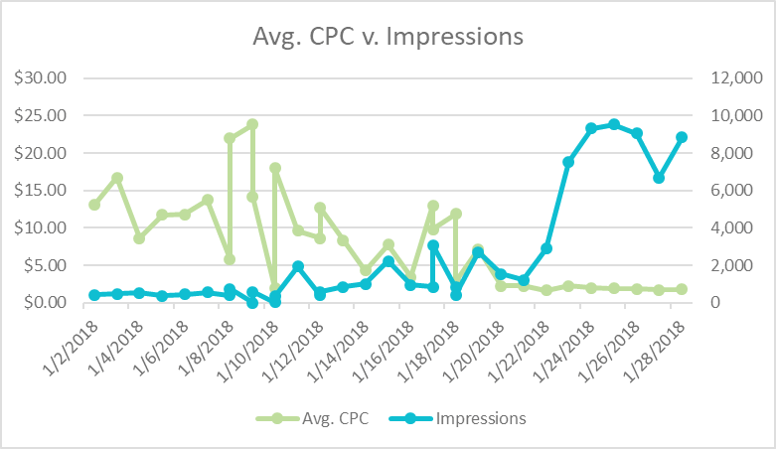

Welp! Nothing really changed. Crap. Manual bidding is not working. Maximizing clicks is not working. But we knew this campaign had been successful in the past and drove a lot of traffic at about $3 per click. Let’s cap the bid at $3 per click and hope for the best. And then magic:

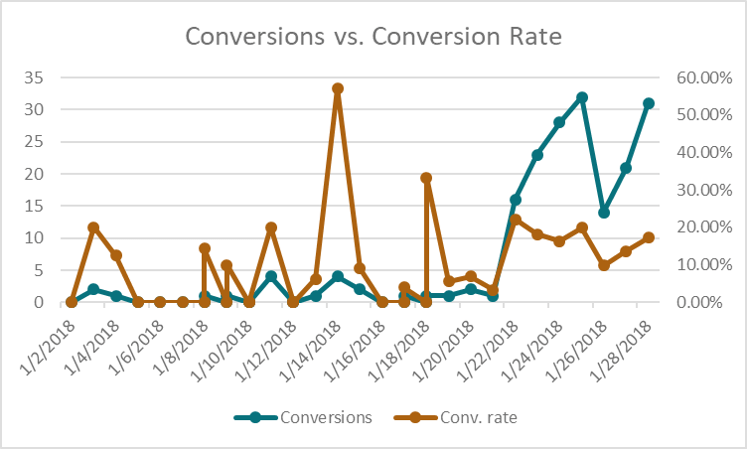

Impressions jumped from fewer than 3,000 per day to nearly 9,000. Cost per click dropped to less than $2. Cost per free account activation dropped from over $110 to $13. We were not only back to where we had been before the campaign started tanking; we had surpassed it.

What is interesting is the whole process feels backward. We increased impressions while worsening position. The less we paid per click with machine learning, the more impressions we got.

Furthermore, the quality of traffic improved. The account activation conversion rate nearly doubled, increasing from 8.5% to 16.3%. We were paying less for a lower position, getting more traffic, and more folks were signing up for an account.
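The arithmetic behind these numbers is worth a quick look. Below is a rough sketch in Python, not anything Google or Obility actually runs. It assumes cost per activation is simply cost per click divided by the click-to-activation rate, and it treats a bid as the price actually paid per click, which makes it an upper bound. The function names are ours, and the rates are the two conversion rates quoted above, which may have been measured over different date ranges than the other figures.

```python
def cost_per_activation(cpc: float, conversion_rate: float) -> float:
    """Expected spend per activation when each click costs `cpc`."""
    if not 0 < conversion_rate <= 1:
        raise ValueError("conversion_rate must be in (0, 1]")
    return cpc / conversion_rate


def break_even_cpc(target_cpa: float, conversion_rate: float) -> float:
    """Highest cost per click that still meets the target cost per activation."""
    if not 0 < conversion_rate <= 1:
        raise ValueError("conversion_rate must be in (0, 1]")
    return target_cpa * conversion_rate


# Figures quoted in this post.
TARGET_CPA = 25.00     # target cost per free account activation
RATE_BEFORE = 0.085    # activation rate before the change
RATE_AFTER = 0.163     # activation rate after the change
SUGGESTED_BID = 32.60  # Google's suggested first position bid
BID_CAP = 3.00         # the cap we put on maximize clicks

if __name__ == "__main__":
    for label, rate in (("before", RATE_BEFORE), ("after", RATE_AFTER)):
        print(f"Break-even CPC {label}: ${break_even_cpc(TARGET_CPA, rate):.2f}")
        print(f"Cost per activation at the ${BID_CAP:.2f} cap, {label}: "
              f"${cost_per_activation(BID_CAP, rate):.2f}")
    print(f"Cost per activation at the suggested bid: "
          f"${cost_per_activation(SUGGESTED_BID, RATE_BEFORE):.2f}")
```

Under those assumptions, the break-even click price was only a little over $2 at the old conversion rate, which is why a $30 click was never going to work. At the new rate, even a click at the full $3 cap comes in under the $25 target.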

The main takeaway from this experience is that we need to keep testing Google’s automated bid strategies and figure out what provides real results, regardless of whether it makes intuitive sense. This instance is unique in that the campaign had previously performed well. We knew the search volume was there, and we knew we had been able to get significant clicks at a certain bid previously. Those parameters will not exist for most campaigns.

Next Steps for Machine Learning

A logical next step for Obility is to start using value-based bidding as a bid cap when maximizing clicks from core campaigns. If automated bids can help us increase clicks and impressions from campaigns proven to drive opportunities below our target cost per opportunity, we need to take advantage.

And because the outcomes are not inherently intuitive, we need to be willing to set aside our past experience with position, impressions, and cost per click. We need to explore what Google’s bidding algorithm can do when interfacing with Google’s ad rank algorithm. It’s time to fight machines with machines.